You’re out of free articles.

Log in

To continue reading, log in to your account.

Create a Free Account

To unlock more free articles, please create a free account.

Sign In or Create an Account.

By continuing, you agree to the Terms of Service and acknowledge our Privacy Policy

Welcome to Heatmap

Thank you for registering with Heatmap. Climate change is one of the greatest challenges of our lives, a force reshaping our economy, our politics, and our culture. We hope to be your trusted, friendly, and insightful guide to that transformation. Please enjoy your free articles. You can check your profile here .

subscribe to get Unlimited access

Offer for a Heatmap News Unlimited Access subscription; please note that your subscription will renew automatically unless you cancel prior to renewal. Cancellation takes effect at the end of your current billing period. We will let you know in advance of any price changes. Taxes may apply. Offer terms are subject to change.

Subscribe to get unlimited Access

Hey, you are out of free articles but you are only a few clicks away from full access. Subscribe below and take advantage of our introductory offer.

subscribe to get Unlimited access

Offer for a Heatmap News Unlimited Access subscription; please note that your subscription will renew automatically unless you cancel prior to renewal. Cancellation takes effect at the end of your current billing period. We will let you know in advance of any price changes. Taxes may apply. Offer terms are subject to change.

Create Your Account

Please Enter Your Password

Forgot your password?

Please enter the email address you use for your account so we can send you a link to reset your password:

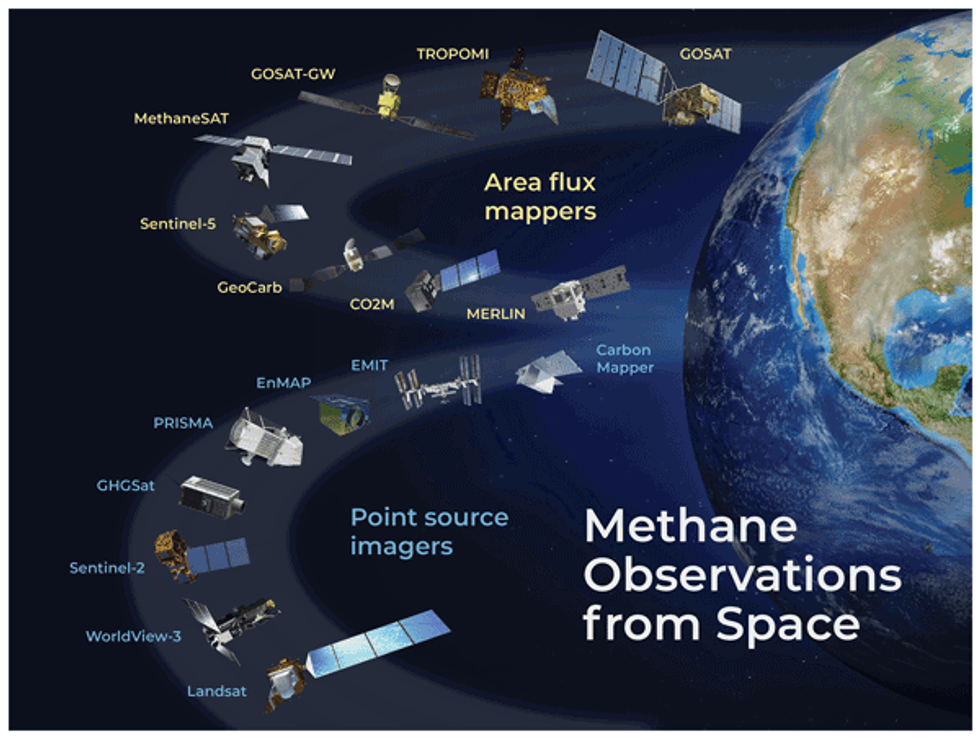

Over a dozen methane satellites are now circling the Earth — and more are on the way.

On Monday afternoon, a satellite the size of a washing machine hitched a ride on a SpaceX rocket and was launched into orbit. MethaneSAT, as the new satellite is called, is the latest to join more than a dozen other instruments currently circling the Earth and monitoring emissions of the ultra-powerful greenhouse gas methane. But it won’t be the last. Over the next several months, at least two additional methane-detecting satellites from the U.S. and Japan are scheduled to join the fleet.

There’s a joke among scientists that there are so many methane-detecting satellites in space that they are reducing global warming — not just by providing essential data about emissions, but by blocking radiation from the sun.

So why do we keep launching more?

Despite the small army of probes in orbit, and an increasingly large fleet of methane-detecting planes and drones closer to the ground, our ability to identify where methane is leaking into the atmosphere is still far too limited. As with carbon dioxide, sources of methane around the world are numerous and diffuse. They can be natural, like wetlands and oceans, or man-made, like decomposing manure on farms, rotting waste in landfills, and leaks from oil and gas operations.

Scientists say MethaneSAT will help answer big open questions about methane: which sources are driving the most emissions and, by extension, how to tackle climate change. But even then, some say we’ll need to launch even more instruments into space to really get to the bottom of it all.

Measuring methane from space only began in 2009 with the launch of the Greenhouse Gases Observing Satellite, or GOSAT, by the Japan Aerospace Exploration Agency. Previously, most of the world’s methane detectors were on the ground in North America. GOSAT enabled scientists to develop a more geographically diverse understanding of major sources of methane to the atmosphere.

Soon after, the Environmental Defense Fund, which led the development of MethaneSAT, began campaigning for better data on methane emissions. Through its own on-the-ground measurements, the group discovered that the Environmental Protection Agency’s estimates of leaks from U.S. oil and gas operations were totally off. EDF took this as a call to action. Because methane has such a strong warming effect but breaks down after about a decade in the atmosphere, curbing methane emissions can slow warming in the near term.

“Some call it the low-hanging fruit,” Steven Hamburg, the chief scientist at EDF leading the MethaneSAT project, said during a press conference on Friday. “I like to call it the fruit lying on the ground. We can really reduce those emissions and we can do it rapidly and see the benefits.”

But in order to do that, we need a much better picture than what GOSAT or other satellites like it can provide.

In the years since GOSAT launched, the field of methane monitoring has exploded. Today, there are two broad categories of methane instruments in space. Area flux mappers, like GOSAT, take global snapshots. They can show where methane concentrations are generally higher, and even identify exceptionally large leaks — so-called “ultra-emitters.” But the vast majority of leaks, big and small, are invisible to these instruments. Each pixel in a GOSAT image is 10 kilometers wide. Most of the time, there’s no way to zoom into the picture and see which facilities are responsible.

Point source imagers, on the other hand, take much smaller photos at much finer resolution, with pixel sizes down to just a few meters wide. Because each image covers only a small area, their data is geographically limited: they have to be programmed to aim their lenses at very specific targets. But each image contains much more actionable data.

For example, GHGSat, a private company based in Canada, operates a constellation of 12 point-source satellites, each one about the size of a microwave oven. Oil and gas companies and government agencies pay GHGSat to help them identify facilities that are leaking. Jean-Francois Gauthier, the director of business development at GHGSat, told me that each image taken by one of its satellites is 12 kilometers wide, but the resolution for each pixel is 25 meters. A snapshot of the Permian Basin, a major oil and gas producing region in Texas and New Mexico, might contain hundreds of oil and gas wells, owned by a multitude of companies, but GHGSat can tell them apart and assign responsibility.
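To make the resolution tradeoff concrete, here is a rough back-of-the-envelope sketch in Python using only the figures quoted above: a 10-kilometer GOSAT pixel, a 12-kilometer-wide GHGSat image, and 25-meter GHGSat pixels. It assumes square pixels and counts only across the width of a single image; the constant and function names are illustrative and do not come from either operator.

```python
# Figures as reported in the article; assumes square pixels and
# counts pixels only across the width of one image.
GOSAT_PIXEL_M = 10_000    # one GOSAT pixel is about 10 km wide
GHGSAT_SCENE_M = 12_000   # one GHGSat image is about 12 km wide
GHGSAT_PIXEL_M = 25       # one GHGSat pixel is about 25 m wide


def pixels_across(scene_width_m: float, pixel_width_m: float) -> float:
    """Return how many pixels of a given width span a scene of a given width."""
    return scene_width_m / pixel_width_m


# How finely GHGSat divides its own 12 km scene.
ghgsat_pixels = pixels_across(GHGSAT_SCENE_M, GHGSAT_PIXEL_M)

# How many GOSAT pixels the same 12 km stretch would occupy.
gosat_pixels = pixels_across(GHGSAT_SCENE_M, GOSAT_PIXEL_M)

print(f"GHGSat: {ghgsat_pixels:.0f} pixels across a 12 km scene")
print(f"GOSAT: about {gosat_pixels:.1f} pixels across the same 12 km")
```

By this rough count, GHGSat splits one scene into roughly 480 pixels across, while the same stretch of ground would fit into little more than one GOSAT pixel. That is why a GOSAT image can flag a methane hotspot but usually can't point to the facility responsible.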

“We’ll see five, 10, 15, 20 different sites emitting at the same time and you can differentiate between them,” said Gauthier. “You can see them very distinctly on the map and be able to say, alright, that’s an unlit flare, and you can tell which company it is, too.” Similarly, GHGSat can look at a sprawling petrochemical complex and identify the exact tank or pipe that has sprung a leak.

But between the extremely wide-angle lens of the global mappers and the many finely tuned instruments pointing at specific targets, there’s a gap. “It might seem like there’s a lot of instruments in space, but we don’t have the kind of coverage that we need yet, believe it or not,” Andrew Thorpe, a research technologist at NASA’s Jet Propulsion Laboratory, told me. He has been working with the nonprofit Carbon Mapper on a new constellation of point source imagers, the first of which is supposed to launch later this year.

The coverage gap comes down to the size of the existing images, their resolution, and the amount of time it takes to get them. One of the challenges, Thorpe said, is that it’s very hard to get a continuous picture of any given leak. Oil and gas equipment can spring leaks at random, and those leaks can be continuous or intermittent. If you’re just getting a snapshot every few weeks, you may not be able to tell how long a leak lasted, or you might miss a short but significant plume. Meanwhile, oil and gas fields are also changing on a weekly basis, Joost de Gouw, an atmospheric chemist at the University of Colorado, Boulder, told me. New wells are being drilled in new places, places those point-source imagers may not be looking at.

“There’s a lot of potential to miss emissions because we’re not looking,” he said. “If you combine that with clouds — clouds can obscure a lot of our observations — there are still going to be a lot of times when we’re not actually seeing the methane emissions.”

De Gouw hopes MethaneSAT will help resolve one of the big debates about methane leaks. Between the millions of sites that release small amounts of methane all the time, and the handful of sites that exhale massive plumes infrequently, which is worse? What fraction of the total do those bigger emitters represent?

Paul Palmer, a professor at the University of Edinburgh who studies the Earth’s atmospheric composition, is hopeful that MethaneSAT will help pull together a more comprehensive picture of what’s driving changes in the atmosphere. Around the turn of the century, methane levels pretty much leveled off, he said. But then, around 2007, they started to grow again, and have since accelerated. Scientists have reached different conclusions about why.

“There’s lots of controversy about what the big drivers are,” Palmer told me. Some think it’s related to oil and gas production increasing. Others — and he’s in this camp — think it’s related to warming wetlands. “Anything that helps us would be great.”

MethaneSAT sits somewhere between the global mappers and point source imagers. It will take larger images than GHGSat, each one 200 kilometers wide, which means it will be able to cover more ground in a single day. Those images will also contain finer detail about leaks than GOSAT’s, but they won’t necessarily be able to identify exactly which facilities the smaller leaks are coming from. Also, unlike GHGSat’s, MethaneSAT’s data will be freely available to the public.

EDF, which raised $88 million for the project and spent nearly a decade working on it, says that one of MethaneSAT’s main strengths will be to provide much more accurate basin-level emissions estimates. That means it will enable researchers to track the emissions of the entire Permian Basin over time, and compare it with other oil and gas fields in the U.S. and abroad. Many countries and companies are making pledges to reduce their emissions, and MethaneSAT will provide data on a relevant scale that can help track progress, Maryann Sargent, a senior project scientist at Harvard University who has been working with EDF on MethaneSAT, told me.

It could also help the Environmental Protection Agency understand whether its new methane regulations are working. It could help with the development of new standards for natural gas being imported into Europe. At the very least, it will help oil and gas buyers differentiate between products associated with higher or lower methane intensities. It will also enable fossil fuel companies that measure their own methane emissions to compare their performance to regional averages.

MethaneSAT won’t be able to look at every source of methane emissions around the world. The project is limited by how much data it can send back to Earth, so it has to be strategic. Sargent said the team is limiting data collection to 30 targets per day, and in the near term, those will mostly be oil and gas producing regions. The team aims to map emissions from 80% of global oil and gas production in the first year. The outcome could be revolutionary.

“We can look at the entire sector with high precision and track those emissions, quantify them and track them over time. That’s a first for empirical data for any sector, for any greenhouse gas, full stop,” Hamburg told reporters on Friday.

But this still won’t be enough, said Thorpe of NASA. He wants to see the next generation of instruments start to look more closely at natural sources of emissions, like wetlands. “These types of emissions are really, really important and very poorly understood,” he said. “So I think there’s a heck of a lot of potential to work towards the sectors that have been really hard to do with current technologies.”


It’s either reassure investors now or reassure voters later.

Investor-owned utilities are a funny type of company. On the one hand, they answer to their shareholders, who expect growing returns and steady dividends. But those returns are the outcome of an explicitly political process — negotiations with state regulators who approve the utilities’ requests to raise rates and to make investments, on which utilities earn a rate of return that also must be approved by regulators.

Utilities have been requesting a lot of rate increases — some $31 billion in 2025, according to the energy policy group PowerLines, more than double the amount requested the year before. At the same time, those rate increases have helped push electricity prices up over 6% in the last year, while overall prices rose just 2.4%.

Unsurprisingly, people have noticed, and unsurprisingly, politicians have responded. (After all, voters are most likely to blame electric utilities and state governments for rising electricity prices, Heatmap polling has found.) Democrat Mikie Sherrill, for instance, won the New Jersey governorship on the back of her proposal to freeze rates in the state, which has seen some of the country’s largest rate increases.

This puts utilities in an awkward position. They need to boast about earnings growth to their shareholders while also convincing Wall Street that they can avoid becoming punching bags in state capitols.

Make no mistake, the past year has been good for these companies and their shareholders. Utilities in the S&P 500 outperformed the market as a whole, and had largely good news to tell investors in the past few weeks as they reported their fourth quarter and full-year earnings. Still, many utility executives spent quite a bit of time on their most recent earnings calls talking about how committed they are to affordability.

When Exelon, which owns several utilities in PJM Interconnection (the country’s largest grid and ground zero for upset over the influx of data centers and rising rates), trumpeted its growing rate base, CEO Calvin Butler argued that this “steady performance is a direct result of a continued focus on affordability.”

But, a Wells Fargo analyst cautioned, there is a growing number of “affordability things out there,” as they put it, “whether you are looking at Maryland, New Jersey, Pennsylvania, Delaware.” To name just one, Pennsylvania Governor Josh Shapiro said in a speech earlier this month that investor-owned utilities “make billions of dollars every year … with too little public accountability or transparency.” Pennsylvania’s Exelon-owned utility, PECO, won approval at the end of 2024 to hike rates by 10%.

When asked specifically about its regulatory strategy in Pennsylvania and when it intended to file a new rate case, Butler said that, “with affordability front and center in all of our jurisdictions, we lean into that first,” but cautioned that “we also recognize that we have to maintain a reliable and resilient grid.” In other words, Exelon knows that it’s under the microscope from the public.

Butler went on to neatly lay out the dilemma for utilities: “Everything centers on affordability and maintaining a reliable system,” he said. Or to put it slightly differently: Rate increases are justified by bolstering reliability, but they’re often opposed by the public because of how they impact affordability.

Of the large investor-owned utilities, it was probably Duke Energy, which owns electric utilities in the Carolinas, Florida, Kentucky, Indiana, and Ohio, that had to most carefully navigate the politics of higher rates, assuring Wall Street over and over how committed it was to affordability. “We will never waver on our commitment to value and affordability,” Duke chief executive Harry Sideris said on the company’s February 10 earnings call.

In November, Duke requested a $1.7 billion revenue increase over the course of 2027 and 2028 for two North Carolina utilities, Duke Energy Carolinas and Duke Energy Progress, amounting to a 15% hike. The typical residential Duke Energy Carolinas customer would see $17.22 added onto their monthly bill in 2027, while Duke Energy Progress ratepayers would be responsible for $23.11 more, with smaller increases in 2028.

These rate cases come “amid acute affordability scrutiny, making regulatory outcomes the decisive variable for the earnings trajectory,” Julien Dumoulin-Smith, an analyst at Jefferies, wrote in a note to clients. In other words, in order to continue to grow earnings, Duke needs to convince regulators and a skeptical public that the rate increases are necessary.

“Our customers remain our top priority, and we will never waver on our commitment to value and affordability,” Sideris told investors. “We continue to challenge ourselves to find new ways to deliver affordable energy for our customers.”

All in all, “affordability” and “affordable” came up 15 times on the call. A year earlier, they came up just three times.

When asked by a Jefferies analyst about how Duke could hit its forecasted earnings growth through 2029, Sideris zeroed in on the regulatory side: “We are very confident in our regulatory outcomes,” he said.

At the same time, Duke told investors that it planned to increase its five-year capital spending plan to $103 billion — “the largest fully regulated capital plan in the industry,” Sideris said.

As far as utilities are concerned, with their multiyear planning and spending cycles, we are only at the beginning of the affordability story.

“The 2026 utility narrative is shifting from ‘capex growth at all costs’ to ‘capex growth with a customer permission slip,’” Dumoulin-Smith wrote in a separate note on Thursday. “We believe it is no longer enough for utilities to say they care about affordability; regulators and investors are demanding proof of proactive behavior.”

If they can’t come up with answers that satisfy their investors, ultimately they’ll have to answer to the voters. Last fall, two Republican utility regulators in Georgia lost their reelection bids by huge margins thanks in part to a backlash over years of rate increases they’d approved.

“Especially as the November 2026 elections approach, utilities that fail to demonstrate concrete mitigants face political and reputational risk and may warrant a credibility discount in valuations, in our view,” Dumoulin-Smith wrote.

At the same time, utilities are dealing with increased demand for electricity, which almost necessarily means making more investments to better serve that new load. In the short term, those investments can translate into higher prices. While large technology companies and the White House are making public commitments to shield existing customers from higher costs, utility rates are determined in rate cases, not in press releases.

“As the issue of rising utility bills has become a greater economic and political concern, investors are paying attention,” Charles Hua, the founder and executive director of PowerLines, told me. “Rising utility bills are impacting the investor landscape just as they have reshaped the political landscape.”

Plus more of the week’s top fights in data centers and clean energy.

1. Osage County, Kansas – A wind project years in the making is dead — finally.

2. Franklin County, Missouri – Hundreds of Franklin County residents showed up to a public meeting this week to hear about a $16 billion data center proposed in Pacific, Missouri, only for the city’s planning commission to announce that the issue had been tabled because the developer still hadn’t finalized its funding agreement.

3. Hood County, Texas – Officials in this Texas county voted for the second time this month to reject a moratorium on data centers, citing the risk of litigation.

4. Nantucket County, Massachusetts – On the bright side, one of the nation’s most beleaguered wind projects appears ready to be completed any day now.

Talking with Climate Power senior advisor Jesse Lee.

For this week's Q&A I hopped on the phone with Jesse Lee, a senior advisor at the strategic communications organization Climate Power. Last week, his team released new polling showing that while voters oppose the construction of data centers powered by fossil fuels by a 16-point margin, that flips to a 25-point margin of support when the hypothetical data centers are powered by renewable energy sources instead.

I was eager to speak with Lee because of Heatmap’s own polling on this issue, as well as President Trump’s State of the Union this week, in which he pitched Americans on his negotiations with tech companies to provide their own power for data centers. Our conversation has been lightly edited for length and clarity.

What does your research and polling show when it comes to the tension between data centers, renewable energy development, and affordability?

The huge spike in utility bills under Trump has shaken up how people perceive clean energy and data centers. But it’s gone in two separate directions. They see data centers as a cause of high utility prices, one that’s either already taken effect or is coming to town when a new data center is being built. At the same time, we’ve seen rising support for clean energy.

As we’ve seen in our own polling, nobody is coming out looking golden with the public amidst these utility bill hikes — not Republicans, not Democrats, and certainly not oil and gas executives or data center developers. But clean energy comes out positive; it’s viewed as part of the solution here. And we’ve seen that even in recent MAGA polls — Kellyanne Conway had one; Fabrizio, Lee & Associates had one; and both showed positive support for large-scale solar even among Republicans and MAGA voters. And it’s way high once it’s established that they’d be built here in America.

A year or two ago, if you went to a town hall about a new potential solar project along the highway, it was fertile ground for astroturf folks to come in and spread flies around. There wasn’t much on the other side — maybe there was some talk about local jobs, but unemployment was really low, so it didn’t feel super salient. Now there’s an energy affordability crisis; utility bills had been stable for 20 years, but suddenly they’re not. And I think if you go to the town hall and there’s one person spewing political talking points that they've been fed, and then there’s somebody who says, “Hey, man, my utility bills are out of control, and we have to do something about it,” that’s the person who’s going to win out.

The polling you’ve released shows that 52% of people oppose data center construction altogether, but that there’s more limited local awareness: Only 45% have heard about data center construction in their own communities. What’s happening here?

There’s been a fair amount of coverage of [data center construction] in the press, but it’s definitely been playing catch-up with the electric energy the story has on social media. I think many in the press are not even aware of the fiasco in Memphis over Elon Musk’s natural gas plant. But people have seen the visuals. I mean, imagine a little farmhouse that somebody bought, and there’s a giant, 5-mile-long building full of computers next to it. It’s got an almost dystopian feel to it. And then you hear that the building is using more electricity than New York City.

The big takeaway of the poll for me is that coal and natural gas are an anchor on any data center project, and reinforce the worst fears about it. What you see is that when you attach clean energy [to a data center project], it actually brings them above the majority of support. It’s not just paranoia: We are seeing the effects on utility rates and on air pollution — there was a big study just two days ago on the effects of air pollution from data centers. This is something that people in rural, urban, or suburban communities are hearing about.

Do you see a difference in your polling between natural gas-powered and coal-powered data centers? In our own research, coal is incredibly unpopular, but voters seem more positive about natural gas. I wonder if that narrows the gap.

I think if you polled them individually, you would see some distinction there. But again, things like the Elon Musk fiasco in Memphis have circulated, and people are aware of the sheer volume of power being demanded. Coal is about the dirtiest possible way you can do it. But if it’s natural gas, and it’s next door all the time just to power these computers — that’s not going to be welcome to people.

I'm sure if you disentangle it, you’d see some distinction, but I also think it might not be that much. I’ll put it this way: If you look at the default opposition to data centers coming to town, it’s not actually that different from just the coal and gas numbers. Coal and gas reinforce the default opposition. The big difference is when you have clean energy — that bumps it up a lot. But if you say, “It’s a data center, but what if it were powered by natural gas?” I don’t think that would get anybody excited or change their opinion in a positive way.

Transparency with local communities is key when it comes to questions of renewable buildout, affordability, and powering data centers. What is the message you want to leave people with about Climate Power’s research in this area?

Contrary to this dystopian vision of power, people do have control over their own destinies here. If people speak out and demand that data centers be powered by clean energy, they can get those data centers to commit to it. In the end, there’s going to be a squeeze, and something is going to have to give in terms of Trump having his foot on the back of clean energy — I think something will give.

Demand transparency in terms of what kind of pollution to expect. Demand transparency in terms of what kind of power there’s going to be, and if it’s not going to be clean energy, people are understandably going to oppose it and make their voices heard.